Migrating to MRTK2 - setting up and understanding Eye Tracking

Intro

One of the exciting features HoloLens 2 brings us is Eye Tracking. On HoloLens 1, you had to move your whole head to move the gaze cursor. While that works well enough for a lot of applications, and most people seem to get used to it pretty quickly, Mother Nature has equipped us with roving eyes. HoloLens 2, when calibrated for Eye Tracking, can actually track what you are looking at, not merely where your head is pointed.

Although there is a nice demo in the Mixed Reality Toolkit 2, it took me a while to find out how all the events actually work and need to be hooked up to get it to work consistently. So I made a little demo that works like this:

Events and tracking them

The little blue globe is the target. It is equipped with an EyeTrackingTarget script from the MRTK2 that supports five events, which you can see going off as the red spheres turn green:

- LS: On Look At Start

- WL: While Looking At Target

- LA: On Look Away

- DW: On Dwell

- S: On Selected

The EyeTrackingTarget is configured as follows:

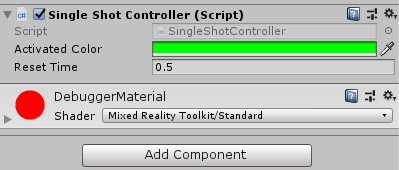

In the scene, the whole thing showing the events (the five little red-turning-green globes with labels) is one prefab containing five little spheres, each with a label above it and each a prefab of its own. Every sphere has a "Single Shot Controller" script that turns its sphere green for 0.5 seconds when an event is called.

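It's a super simple script; the interesting part is even shorter:

```csharp
// Called by the eye tracking events: remember when the sphere was last activated.
public void ShowActivated()
{
    _timeActivated = Time.time;
}

// Every frame, pick the color that matches the time since the last activation.
void Update()
{
    var desiredColor = Time.time - _timeActivated > _resetTime ?
        _originalColor : _activatedColor;

    // Only touch the material when the color actually has to change.
    if (_material.color != desiredColor)
    {
        _material.color = desiredColor;
    }
}
```

When ShowActivated is called, the _timeActivated field is set to the current time. Update, which Unity calls once per frame (so roughly every 60th of a second), then checks whether it should set the color to red or green, depending on whether the latest call to ShowActivated was more than half a second ago.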
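For readers who want to try this without opening the demo project, here is a minimal sketch of what the complete script might look like. Only ShowActivated, Update and the field names come from the excerpt above; the default values, the Start method and the way the material is obtained are my assumptions, not necessarily what the demo project does.

```csharp
using UnityEngine;

// Sketch of a "single shot" indicator. Field names follow the excerpt;
// defaults and the Start logic are assumptions made for this example.
public class SingleShotController : MonoBehaviour
{
    [SerializeField]
    private Color _activatedColor = Color.green;

    [SerializeField]
    private float _resetTime = 0.5f;

    private Color _originalColor;
    private Material _material;

    // Assumption: start "infinitely long ago" so the sphere shows its
    // original color at launch instead of briefly lighting up.
    private float _timeActivated = float.NegativeInfinity;

    void Start()
    {
        // Reading .material gives this renderer its own material instance,
        // so each sphere can change color independently of the others.
        _material = GetComponent<Renderer>().material;
        _originalColor = _material.color;
    }

    // Hook this method up to an EyeTrackingTarget event in the inspector.
    public void ShowActivated()
    {
        _timeActivated = Time.time;
    }

    void Update()
    {
        var desiredColor = Time.time - _timeActivated > _resetTime ?
            _originalColor : _activatedColor;

        if (_material.color != desiredColor)
        {
            _material.color = desiredColor;
        }
    }
}
```

Because an event that fires every frame keeps refreshing _timeActivated, its sphere stays green for as long as the event keeps coming, while a one-off event produces a half-second flash.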

What happens when

The event names are pretty straightforward, and things happen more or less as you would expect, although not entirely.

What is actually happening:

- When the user first looks at the eye tracked object, "On Look At Start" is fired once

- While the user keeps looking, "While Looking At Target" keeps being called. Thus, the green sphere stays green. The first call seems to happen at the same instant - or nearly the same instant - as the previous event

- As soon as the user stops looking at the sphere, "On Look Away" is called and "While Looking At Target" is no longer called

- "On Dwell" is called after the time defined in the "Dwell Time in Sec" slider has passed and the user is still looking at the object. I took the ridiculously user-unfriendly time of three seconds to make sure this event was easily distinguishable from the other events. Here's the thing though - it's called only once. That kind of confused me.

- "On Selected" is called when the object is being looked at and you say "Select". This is one of the predefined commands in the default speech commands profile (DefaultMixedRealitySpeechCommandsProfile)
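If you would rather verify this behavior in the console than by watching spheres, the events can also be subscribed to from code. The sketch below is a hypothetical helper (EyeTrackingEventLogger is my own name, not part of the MRTK2) and assumes the EyeTrackingTarget in your MRTK2 version exposes the five events as UnityEvent members named OnLookAtStart, WhileLookingAtTarget, OnLookAway, OnDwell and OnSelected; check the source of your version if it does not compile.

```csharp
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical helper: logs the EyeTrackingTarget events of the object it sits on.
[RequireComponent(typeof(EyeTrackingTarget))]
public class EyeTrackingEventLogger : MonoBehaviour
{
    private EyeTrackingTarget _target;
    private int _whileLookingCalls;

    void Awake()
    {
        _target = GetComponent<EyeTrackingTarget>();
    }

    void OnEnable()
    {
        // Assumed member names, matching the inspector labels.
        _target.OnLookAtStart.AddListener(HandleLookAtStart);
        _target.WhileLookingAtTarget.AddListener(HandleWhileLooking);
        _target.OnLookAway.AddListener(HandleLookAway);
        _target.OnDwell.AddListener(HandleDwell);
        _target.OnSelected.AddListener(HandleSelected);
    }

    void OnDisable()
    {
        _target.OnLookAtStart.RemoveListener(HandleLookAtStart);
        _target.WhileLookingAtTarget.RemoveListener(HandleWhileLooking);
        _target.OnLookAway.RemoveListener(HandleLookAway);
        _target.OnDwell.RemoveListener(HandleDwell);
        _target.OnSelected.RemoveListener(HandleSelected);
    }

    private void HandleLookAtStart()
    {
        _whileLookingCalls = 0;
        Debug.Log("LS: On Look At Start");
    }

    // Called repeatedly, so count the calls instead of logging each one.
    private void HandleWhileLooking()
    {
        _whileLookingCalls++;
    }

    private void HandleLookAway()
    {
        Debug.Log($"LA: On Look Away ({_whileLookingCalls} While Looking At Target calls)");
    }

    private void HandleDwell()
    {
        Debug.Log("DW: On Dwell");
    }

    private void HandleSelected()
    {
        Debug.Log("S: On Selected");
    }
}
```

Put it on the same game object as the EyeTrackingTarget. If the description above holds, every look should log exactly one LS line, at most one DW line, and one LA line with a call count that grows the longer you keep looking.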

Setting up and configuring eye tracking in profiles

Coming from the default profile, you will need to configure at least two profiles, and better still three.

First, you will need to clone the Default Toolkit profile itself. The first thing I do, while still in the early phases, is disable the diagnostics system, as I don't want that profiler in my face the whole time:

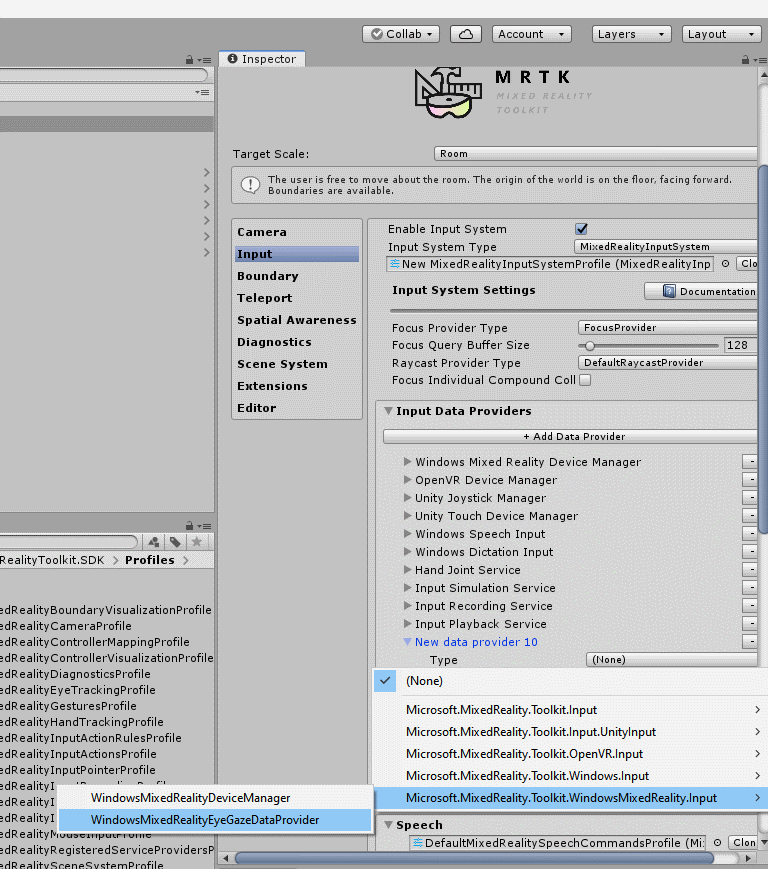

Next, you will have to clone the Input System Profile and add a "Windows Mixed Reality Eye Gaze Provider".

As you can see, this sits in namespace "Microsoft.MixedReality.Toolkit.WindowsMixedReality.Input"

And then finally, and optionally, if you want this to work in the editor too, you will have to configure the Input Simulation Service. You do that by cloning the default input simulation profile and checking the "Simulate Eye Position" checkbox.
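To check whether the profiles are actually wired up, a small script can ask the input system if eye gaze data is coming in. This is only a sketch: EyeGazeStatusLogger is a hypothetical name of mine, and it assumes a MRTK2 version that has CoreServices and an EyeGazeProvider with an IsEyeTrackingEnabledAndValid property (older releases may use different names).

```csharp
using Microsoft.MixedReality.Toolkit;
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical helper: logs whenever the eye tracking status changes.
public class EyeGazeStatusLogger : MonoBehaviour
{
    // Null until the first check, so the initial state is always logged.
    private bool? _lastState;

    void Update()
    {
        // Assumed API: the eye gaze provider registered with the input system.
        IMixedRealityEyeGazeProvider provider = CoreServices.InputSystem?.EyeGazeProvider;
        bool isValid = provider != null && provider.IsEyeTrackingEnabledAndValid;

        if (_lastState != isValid)
        {
            _lastState = isValid;
            Debug.Log(isValid
                ? "Eye tracking is enabled and delivering valid data."
                : "Eye tracking is not available (yet).");
        }
    }
}
```

Drop it on any game object in the scene. In the editor it should only report valid data when eye position simulation is enabled.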

One more thing: setting capabilities

You will now notice the Gaze cursor turning up in the editor, so you might think you are done. Well, almost. There's the small matter of capabilities. C++ or not, the result is still a UWP app, and Gaze Input is a capability that you need to ask consent for. This, unfortunately, is not yet implemented in Unity. So after you have generated the C++ app, you will need to open it in Visual Studio, select the Package.appxmanifest file, and check the Gaze Input capability there.
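If you prefer editing the manifest as text rather than using the Visual Studio designer, checking that box should boil down to one extra line in the Capabilities section of the generated manifest. A sketch of the relevant fragment, where whatever Unity already generated stays untouched:

```xml
<Capabilities>
  <!-- Capabilities generated by Unity stay as they are. -->
  <DeviceCapability Name="gazeInput" />
</Capabilities>
```

Keep in mind this file lives in the generated solution, so the capability may need to be added again when you build to a fresh folder.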

If you deploy the resulting solution to an emulator (or, if you are one of the lucky ones out there, an actual HoloLens 2) and it asks for your consent, you did it right.

Conclusion and final words

Setting up Eye Tracking is not that hard, but it takes a few steps. Mind you, the MRTK2 comes with a few profiles that make setting things up easier - I just wrote down the steps from scratch. The demo project shows this in all its glory ;) and allows you to play with it yourself without having to set it up. Notice there's hardly any code outside of the MRTK2 itself involved - there's only one custom script (my SingleShotController) and that's very simple.

By the way - in my own (so far single) HoloLens 2 app I only use the While Looking At Target event. This seems to be the most trustworthy. In previous iterations of the MRTK2 and/or the HoloLens emulator, the other events did not go off reliably enough (IMHO) to use them for real. Of course, this may all be different now, and most likely is completely different (better) on a real device. We are still waiting for that.

On a final note – leaving the eye cursor visible can be confusing and/or annoying, or so I have been told. So under normal circumstances, it should be turned off – the object being looked at should give some indication that it notices being looked at. I have found a way to do this myself, but that’s pretty complex, and just as I was about to blog about that, Julia Schwarz herself added a (better) sample to turn off pointers by code to the main MRTK2 repo.
