Using a messenger to communicate between objects in HoloLens apps

Intro

I know I am past the ‘this has to work one way or the other’ stage of a new development environment when I start spinning off reusable pieces of software. I know that I am really getting comfortable when I start thinking about architecture and building architectural components.

Yes my friends, I am going to talk architecture today.

Unity3D-spaghetti – I need a messenger

Coming from the clean and well-fleshed-out world of UWP XAML development using MVVM (more specifically MVVMLight), Unity3D can be a bit overwhelming. Apart from the obvious – the 3D stuff itself – there is no such thing as data binding, there is no templating (not sure how this would translate to a 3D environment anyway) and in samples (including some of my own) components communicate by getting references to each other, looking in parent or child objects, and calling methods in those components. This is a method that breaks as soon as 3D object hierarchies change, and it’s very easy to make spaghetti code of epic proportions. Plus, it hard-links classes. Speech commands especially come in just ‘somewhere’ and need to go ‘somewhere else’. How lovely it would be to have a kind of messenger. Like the one in MVVMLight. There is a kind of messaging in Unity, but it involves sending messages up or down the 3D object hierarchy. There is no way to reach other branches in that big tree of objects without a lot of hoopla. And to make things worse, you need to call methods by (string) name. A very brittle arrangement.

Good artists steal…

I will be honest up front – most of the code in the Messenger class that I show here is stolen. From here, to be precise. But although it solves one problem – it creates a generic messenger – it still uses strings for event names. So I adapted it quite heavily to use typed parameters, and now – in usage – it very much feels like the MVVMLight messenger. I also made it a HoloToolkit Singleton. I am not going to type out all the details – have a look in the code if you feel inclined to do so. This article concentrates on using it.

So basically, you simply drag this thing anywhere in your object hierarchy – I tend to have a special empty 3D object “Managers” for that in the scene – and then you have the following simple interface:

- To subscribe to a message of type MyMessage, simply write code like this:

    Messenger.Instance.AddListener<MyMessage>(ProcessMyMessage);

    private void ProcessMyMessage(MyMessage msg)
    {
        // Do something
    }

- To send a message of type MyMessage to all subscribed listeners, simply call:

    Messenger.Instance.Broadcast(new MyMessage());

- To stop being notified of messages of type MyMessage, call:

    Messenger.Instance.RemoveListener<MyMessage>(ProcessMyMessage);
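To give a rough idea of what can sit behind an interface like this, here is a minimal sketch of a typed messenger in plain C#. It is not the actual Messenger class from the article: the name `SimpleMessenger` is hypothetical, it is not a HoloToolkit Singleton, and it only assumes that listeners are stored per message type.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for illustration only; not the article's Messenger.
public class SimpleMessenger
{
    // One combined (multicast) delegate per message type.
    private readonly Dictionary<Type, Delegate> _listeners =
        new Dictionary<Type, Delegate>();

    public void AddListener<T>(Action<T> handler)
    {
        Delegate existing;
        _listeners.TryGetValue(typeof(T), out existing);
        // Delegate.Combine accepts null as the first argument.
        _listeners[typeof(T)] = Delegate.Combine(existing, handler);
    }

    public void RemoveListener<T>(Action<T> handler)
    {
        Delegate existing;
        if (!_listeners.TryGetValue(typeof(T), out existing))
        {
            return;
        }

        // Delegate.Remove returns null when no handlers are left.
        var remaining = Delegate.Remove(existing, handler);
        if (remaining == null)
        {
            _listeners.Remove(typeof(T));
        }
        else
        {
            _listeners[typeof(T)] = remaining;
        }
    }

    public void Broadcast<T>(T message)
    {
        Delegate existing;
        if (_listeners.TryGetValue(typeof(T), out existing))
        {
            ((Action<T>)existing)(message);
        }
    }
}
```

Because the message type itself is the lookup key, there are no event-name strings to mistype, and the compiler checks that each handler accepts the right message class.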

Example setup usage

I have revisited my good old CubeBouncer, the very first HoloLens app I ever made and wrote about (although I never published it as such) that basically uses everything a HoloLens can do: it uses gaze, gestures, speech recognition, spatial awareness, interaction of Holograms with reality, occlusion, and spatial sound. Looking back at it now it looks a bit clumsy, which is partially because of my vastly increased experience with Unity3D and HoloLens, but also because of the progress of the HoloToolkit. But anyway, I rewrote it using the new HoloToolkit and using the Messenger class as a working demo of the Messenger.


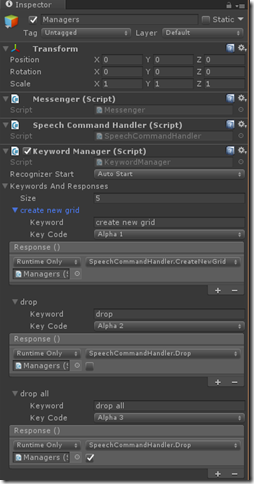

In the Managers object that I use to group, well, manager-like scripts and objects, I have placed a number of components that basically control the whole app. You see the Messenger, a ‘Speech Command Handler’ and a standard HoloToolkit Keyword Manager. This is an enormous improvement over building keyword-recognizing scripts manually, as I did in part 4 of the original CubeBouncer series. In case you need info on how the Keyword Manager works, see this post on moving objects by gestures where it plays a supporting role.

Note, by the way, that I also assigned a keyboard key to all speech commands. This enables you to test quickly within the Unity3D editor without actually speaking, which keeps you from distracting your colleagues (or getting funny looks and/or remarks from them) ;).

The SpeechCommandHandler class is really simple:

    using CubeBouncer.Messages;
    using UnityEngine;
    using HoloToolkitExtensions.Messaging;

    namespace CubeBouncer
    {
        public class SpeechCommandHandler : MonoBehaviour
        {
            public void CreateNewGrid()
            {
                Messenger.Instance.Broadcast(new CreateNewGridMessage());
            }

            public void Drop(bool all)
            {
                Messenger.Instance.Broadcast(new DropMessage { All = all });
            }

            public void Revert(bool all)
            {
                Messenger.Instance.Broadcast(new RevertMessage { All = all });
            }
        }
    }

It basically forwards all speech commands as messages, for anyone who is interested. Notice as well that in the Keyword Manager both “drop” and “drop all” call the same method, but if you look at the image above you will see a checkbox that is only selected for ‘drop all’. This is pretty neat: the editor that goes with this component automatically generates UI components for target method parameters.

Indeed, very similar to how it's done in MVVMLight.
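The message classes themselves are not shown in this article. Judging only from how they are used (`new DropMessage { All = all }` and `msg.All`), they are probably little more than plain data classes. The sketch below is an assumption about their shape, not the actual code from the project:

```csharp
namespace CubeBouncer.Messages
{
    // Assumed shape: carries no data, its type alone is the signal.
    public class CreateNewGridMessage
    {
    }

    // Assumed shape: All is true for "drop all", false for a single cube.
    public class DropMessage
    {
        public bool All { get; set; }
    }

    // Assumed shape: All is true for "revert all", false for a single cube.
    public class RevertMessage
    {
        public bool All { get; set; }
    }
}
```

Keeping messages this small means a sender and a listener only need to agree on the message class, not on each other.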

Example of consuming messages

Now the CubeManager, the thing that creates and manages cubes (it was called “MainStarter” in the original CubeBouncer) is sitting in the HologramCollection object. This is for no other reason than to prove the point that the location of the consumer in the object hierarchy doesn’t matter. This is (now) the only consumer of messages. Its Start method goes like this:

    void Start()
    {
        _distanceMeasured = false;
        _lastInitTime = Time.time;
        _audioSource = GetComponent<AudioSource>();
        Messenger.Instance.AddListener<CreateNewGridMessage>(p => CreateNewGrid());
        Messenger.Instance.AddListener<DropMessage>(ProcessDropMessage);
        Messenger.Instance.AddListener<RevertMessage>(ProcessRevertMessage);
    }

It subscribes to three types of messages. To process those messages, you can use either a lambda expression or just a regular method, as shown above.
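One thing to keep in mind when choosing between the two: in C#, a lambda that is written inline cannot be unsubscribed by writing the same lambda again, because each lambda expression produces a different delegate. The sketch below shows this with a plain `Action<string>` instead of the Messenger (the class name `LambdaUnsubscribeDemo` is made up for this example). Whether this matters for RemoveListener depends on how the Messenger compares delegates internally, which is assumed here to follow normal delegate equality.

```csharp
using System;

// Hypothetical demo class; uses plain delegates, not the article's Messenger.
public static class LambdaUnsubscribeDemo
{
    public static int Run()
    {
        int calls = 0;
        Action<string> handlers = null;

        // Subscribe with an inline lambda.
        handlers += s => calls++;

        // Looks identical, but it is a different delegate: nothing is removed.
        handlers -= s => calls++;
        handlers("first"); // the original lambda still runs

        // Keeping the delegate in a variable makes removal work.
        Action<string> stored = s => calls++;
        handlers += stored;
        handlers -= stored;
        handlers("second"); // only the original lambda runs

        return calls; // 2
    }
}
```

So if a listener ever needs to be removed again, a regular method (or a lambda stored in a field) is the safer choice.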

The processing of the messages is like this:

    public void CreateNewGrid()
    {
        foreach (var c in _cubes)
        {
            Destroy(c);
        }
        _cubes.Clear();
        _distanceMeasured = false;
        _lastInitTime = Time.time;
    }

    private void ProcessDropMessage(DropMessage msg)
    {
        if (msg.All)
        {
            DropAll();
        }
        else
        {
            var lookedAt = GetLookedAtObject();
            if (lookedAt != null)
            {
                lookedAt.Drop();
            }
        }
    }

    private void ProcessRevertMessage(RevertMessage msg)
    {
        if (msg.All)
        {
            RevertAll();
        }
        else
        {
            var lookedAt = GetLookedAtObject();
            if (lookedAt != null)
            {
                lookedAt.Revert(true);
            }
        }
    }

For Drop and Revert, if the “All” property of the message is set, all cubes are dropped (or reverted); otherwise only the cube being looked at is. The rest works as before. Well, kind of – for the actual revert method I now use two LeanTween calls to move the cube back to its original location – the actual code shrank from two methods of about 42 lines together to one 17-line method – that actually has an extra check in it. So as an aside – please use iTween, LeanTween or whatever for animation. Don’t write animations yourself. Laziness is a virtue ;).

Conclusion

I will admit it’s a bit of a contrived example, but the speech recognition is now a thing on its own and it’s up to any listener to act on it – or not. My newest application “Walk the World” uses the Messenger quite a bit more extensively, and components all over the app communicate via that Messenger to receive voice commands, show help screens, and detect the fact that the user has moved too far from the center and the map should be reloaded. These components do not need to have hard links to each other; they just put their observations on the Messenger and other components can choose to act. This makes re-using components for application assembly a lot easier. Kind of like in the UWP world.
